Alibaba Cloud Unveils Qwen3

April 28, 2025 – Alibaba Cloud’s Qwen team has officially launched Qwen3, a new series of large language models (LLMs) that marks a significant step forward in capability. Announced today on the Qwen blog, Qwen3 introduces six models ranging from 0.6B to 30B parameters, designed to excel in multilingual processing, reasoning, and coding. The models were trained on a corpus of 36 trillion tokens across 119 languages and offer a 32k-token context window, extended to 128k tokens on Qwen3-8B, positioning Qwen3 as a major release for open-source AI development.

A Diverse and Powerful Model Lineup

The Qwen3 series includes models tailored for various use cases, from lightweight mobile applications to heavy-duty enterprise tasks. The lineup comprises:

- Qwen3-0.6B and Qwen3-4B: Compact models optimized for mobile and edge devices, balancing efficiency with performance.
- Qwen3-8B: A dense 8-billion-parameter model, offering robust capabilities for general-purpose applications.
- Qwen3-30B-A3B and 30B-A3B-Base: Mixture-of-Experts (MoE) models with 30 billion total parameters and 3 billion active parameters, delivering high efficiency for complex tasks.
- Qwen3-4B-Base: A foundational model for developers seeking a customizable starting point.

Additionally, an MoE model with 15 billion total parameters and 2 billion active parameters (Qwen3-15B-A2B) is expected to follow, further expanding the series’ versatility.

Unprecedented Training Scale and Multilingual Reach

Qwen3’s training process reflects Alibaba Cloud’s ambition. The models were pretrained on 36 trillion tokens covering 119 languages, with a focus on high-quality, diverse datasets. This breadth lets Qwen3 handle multilingual tasks fluently, making it a strong candidate for global applications. The three-stage training pipeline, consisting of pretraining, supervised fine-tuning (SFT), and reinforcement learning from human feedback (RLHF), has been refined to enhance reasoning and coding capabilities so that Qwen3 delivers precise and contextually relevant outputs.

The series also introduces improved Mixture-of-Experts (MoE) stability, allowing for efficient scaling and reduced computational overhead. This is particularly evident in the 30B-A3B models, which activate only about 3 billion of their 30 billion parameters for each token during inference, boosting speed without sacrificing quality.
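To make the idea of activating only a subset of parameters concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Qwen3's actual router: the expert count, the value of k, and the toy "experts" (simple scaling functions) are all hypothetical, chosen only to show that a token's output is computed from a few selected experts rather than all of them.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the outputs of the selected experts only; the rest are never called."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_logits, k))

def active_fraction(total_params_b, active_params_b):
    """Share of parameters used per token, e.g. 3B active out of 30B total."""
    return active_params_b / total_params_b

# Hypothetical toy setup: 8 experts, each just scales its input by a different factor.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, scale=scale: scale * x for scale in range(1, NUM_EXPERTS + 1)]
```

With the figures quoted in the article, `active_fraction(30, 3)` gives 0.1, meaning roughly a tenth of the weights take part in each token's computation.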

Extended Context and Advanced Reasoning

One of Qwen3’s standout features is its 32k-token context window, enabling the models to process and generate responses for lengthy inputs, such as long documents or complex conversations. For specialized applications, Qwen3-8B supports an impressive 128k-token context window, rivaling top-tier proprietary models. This extended context length, combined with scaling-tuned hyperparameters, ensures Qwen3 excels in tasks requiring deep contextual understanding, from academic research to creative writing.
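As a rough illustration of what a fixed context window means in practice, the sketch below checks whether a tokenized input fits and, if not, splits it into window-sized pieces. It assumes "32k" means 32,768 tokens and that some room is reserved for the model's reply; both numbers, and the function names, are illustrative assumptions rather than anything specified by the Qwen team. The token IDs are expected to come from whatever tokenizer the model uses.

```python
def prompt_budget(context_window=32_768, reserve_for_output=1_024):
    """Tokens available for the prompt after reserving space for the reply."""
    budget = context_window - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_window")
    return budget

def fits_in_window(num_prompt_tokens, context_window=32_768, reserve_for_output=1_024):
    """True if the prompt leaves the reserved room for generated tokens."""
    return num_prompt_tokens <= prompt_budget(context_window, reserve_for_output)

def split_into_windows(token_ids, context_window=32_768, reserve_for_output=1_024):
    """Split a list of token IDs into consecutive, non-overlapping chunks that each fit."""
    budget = prompt_budget(context_window, reserve_for_output)
    return [token_ids[start:start + budget] for start in range(0, len(token_ids), budget)]
```

A 70,000-token document would need three passes under these assumed 32k settings, while passing `context_window=131_072` (an assumed value for "128k") lets it fit in one.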

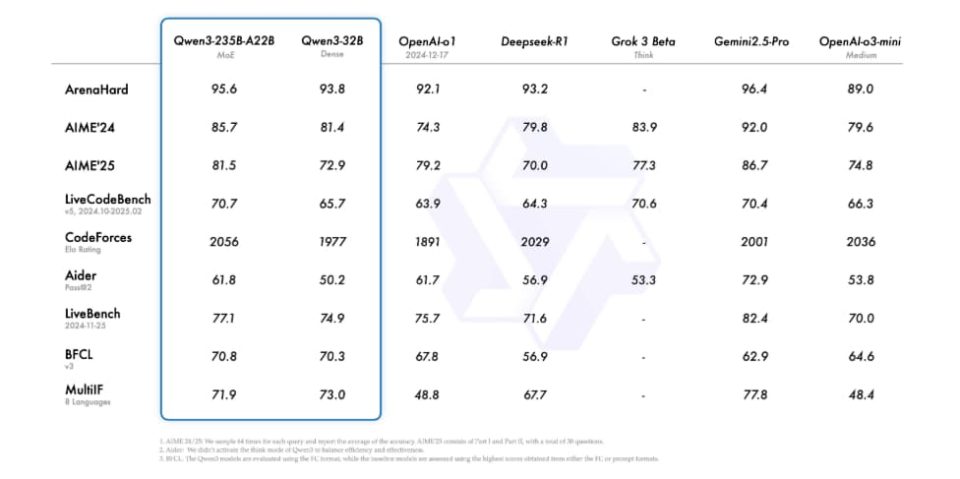

The Qwen team has also prioritized reasoning, with Qwen3-8B showcasing “next-gen reasoning capabilities” that match or surpass competitors like GPT-4o in coding and problem-solving benchmarks. Posts on X highlight Qwen3’s ability to tackle intricate tasks, with users praising its performance in code generation and mathematical reasoning.

Open-Source Commitment and Developer Accessibility

True to Alibaba Cloud’s open-source ethos, Qwen3 models are released under the Apache 2.0 license, making them freely available for research and commercial use under the terms of that license. The models are accessible on platforms like Hugging Face and ModelScope, with seamless integration into frameworks such as transformers, vLLM, and AutoGPTQ. Developers can leverage Qwen3’s compatibility with quantization techniques (Int4 and Int8) to optimize performance in resource-constrained environments.
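For readers who want to try the transformers integration, the following is a minimal sketch of loading a chat model and generating a reply. The repository ID `Qwen/Qwen3-8B` is an assumption (the article does not give exact repository names), the helper names are illustrative, and the settings are ordinary defaults rather than recommendations from the Qwen team. The heavy imports are deferred so the message helper can be used on its own.

```python
MODEL_ID = "Qwen/Qwen3-8B"  # assumed Hugging Face repo ID; not stated in the article

def build_messages(user_prompt, system_prompt=None):
    """Build the chat message list in the role/content format used by chat templates."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages

def generate_reply(user_prompt, model_id=MODEL_ID, max_new_tokens=256):
    """Download the model (several GB) and generate one reply to the prompt."""
    # Imported lazily: requires `transformers`, `torch`, and `accelerate` (for device_map).
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype="auto", device_map="auto"
    )
    # Render the conversation with the model's own chat template.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Write a Python function that reverses a string.")` would fetch the weights on first use, so it is best run on a machine with a suitable GPU and enough disk space.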

The Qwen team has also provided extensive documentation and cookbooks to streamline adoption, covering use cases like voice chatting, video analysis, and omni-modal interactions. A new vLLM version supporting audio output further enhances Qwen3’s multimodal potential, positioning it as a versatile tool for developers building interactive applications.

Community Buzz and Future Prospects

The launch of Qwen3 has sparked excitement across the AI community, with posts on X describing it as a “monster upgrade” and a “game-changer.” The accidental early release of model configurations on ModelScope earlier today fueled anticipation, confirming parameter counts and architectures hours before the official announcement. Users on X have lauded Qwen3’s multilingual support and 128k context window, predicting it will set a new standard for open-source LLMs.

Looking ahead, the Qwen team hints at further releases, including larger models and specialized variants for domains like coding and mathematics. The upcoming code mode on Alibaba’s Tongyi platform, supporting one-click generation of websites, games, and data visualizations, underscores Qwen3’s practical applications. The team is also exploring reasoning-centric models, promising continued innovation in the Qwen ecosystem.

A New Era for Open-Source AI

With Qwen3, Alibaba Cloud reinforces its position as a leader in open-source AI, challenging proprietary giants while empowering developers worldwide. Its massive training scale, multilingual prowess, and advanced reasoning capabilities make Qwen3 a formidable contender in the LLM landscape. As the community begins to explore its potential, Qwen3 is set to drive innovation across industries, from education and research to entertainment and enterprise solutions.

For more details, visit the official Qwen blog at https://qwenlm.github.io/blog/qwen3/ or explore the models on Hugging Face and ModelScope.